https://drive.google.com/file/d/1tl92l6FouzUuku1GbnNqbYneltVnVZed/view?usp=sharing

Pi-hole in a VM

Because of the DHCP configuration problem in Kubernetes, I deleted the Pi-hole pod and installed Pi-hole on an Ubuntu VM in Proxmox.

Result: it worked immediately, 100% success.

Pi-hole for DHCP

To use Pi-hole's DHCP server, the Service must expose UDP port 67 (DHCPv4) and UDP port 547 (DHCPv6), in addition to UDP 53 for DNS:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: pihole-dns-dhcp-service
  namespace: pihole-ns
spec:
  selector:
    app: pihole
  ports:
    - name: dhcp
      protocol: UDP
      port: 67
      targetPort: 67
    - name: dhcpv6
      protocol: UDP
      port: 547
      targetPort: 547
    - name: dns
      protocol: UDP
      port: 53
      targetPort: 53
  type: LoadBalancer
```

https://github.com/MoJo2600/pihole-kubernetes/issues/18

So that the Pi-hole pod runs on the LAN rather than on the internal Kubernetes network:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: piholeserver
  namespace: pihole-ns
  labels:
    app: pihole
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pihole
  template:
    metadata:
      labels:
        run: piholeserver
        app: pihole
    spec:
      hostNetwork: true        # pod-level field: the pod shares the node's network (LAN)
      containers:
        - name: piholeserver
          image: pihole/pihole:latest
          securityContext:
            privileged: true   # nested key; "securityContext.privileged" is not a valid field
          env:
            - name: "DNS1"
              value: "9.9.9.9"
            - name: "DNS2"
              value: "149.112.112.112"
          volumeMounts:
            - mountPath: /etc/pihole/
              name: pihole-config
            - mountPath: /etc/dnsmasq.d/
              name: pihole-dnsmasq
      volumes:
        - name: pihole-config
          hostPath:
            type: DirectoryOrCreate
            path: /usr/kubedata/piholeserver/pihole
        - name: pihole-dnsmasq
          hostPath:
            type: DirectoryOrCreate
            path: /usr/kubedata/piholeserver/dnsmasq.d
```

Persistent Volume or NFS

SMB (`/etc/fstab` entry):

```
//192.168.1.40/9-VideoClub /Videoclub cifs uid=0,credentials=/home/david/.smb,iocharset=utf8,noperm 0 0
```

NFS (manual mount):

```bash
sudo mount -t nfs 192.168.1.40:/mnt/Magneto/9-VideoClub /Videoclub
```

Install the NFS client:

```bash
sudo apt install nfs-common
```

Distribute dedicated volumes per function

Declare the PersistentVolume (working):

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-9
  labels:
    pv: pv-9                 # matched by the selector of pvc-9
spec:
  capacity:
    storage: 1000Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: slow
  mountOptions:
    - hard
    - nfsvers=4.1
  nfs:
    path: /mnt/Magneto/9-VideoClub
    server: 192.168.1.40
```

Then claim the volume (working):

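```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-9
spec:
  storageClassName: slow     # same class as pv-9, otherwise a default StorageClass may be applied
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1000Gi
  selector:
    matchLabels:
      pv: pv-9
```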
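Why the PV label matters: a claim with a `selector` only binds to a PV that carries every `matchLabels` pair, and the storage class names must also be equal. The Python sketch below models just those two checks (the real binder also compares capacity, access modes and volume mode); `can_bind` is a hypothetical helper, not a Kubernetes API.

```python
from typing import Dict, Optional


def can_bind(pv_labels: Dict[str, str], pv_class: Optional[str],
             pvc_match_labels: Dict[str, str], pvc_class: Optional[str]) -> bool:
    """Simplified PV/PVC match: label selector and storage class only."""
    # Every matchLabels pair of the claim must be present on the volume.
    labels_ok = all(pv_labels.get(key) == value
                    for key, value in pvc_match_labels.items())
    # Class names must be equal. In a real cluster an unset class on the
    # claim may first be replaced by the default StorageClass.
    class_ok = pv_class == pvc_class
    return labels_ok and class_ok


# pv-9 without labels (selector cannot match) vs pv-9 labelled pv: pv-9
print(can_bind({}, "slow", {"pv": "pv-9"}, "slow"))              # False
print(can_bind({"pv": "pv-9"}, "slow", {"pv": "pv-9"}, "slow"))  # True
```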

Then mount the PVC in each pod deployment, using subPaths.

Example:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod
spec:
  containers:
    - name: sickchillserver
      image: lscr.io/linuxserver/sickchill
      volumeMounts:
        - mountPath: /config
          name: tr-config
        - mountPath: /downloads
          name: tr-videoclub
          subPath: 00-Tmp/sickchill/downloads
        - mountPath: /tv
          name: tr-videoclub
          subPath: 30-Series
        - mountPath: /anime
          name: tr-videoclub
          subPath: 40-Anime
  volumes:
    - name: tr-videoclub
      persistentVolumeClaim:
        claimName: pvc-9
    - name: tr-config
      hostPath:
        path: /usr/kubedata/sickchillserver/config
        type: DirectoryOrCreate
```
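Each `subPath` is resolved relative to the root of the mounted volume, so here `/tv` in the pod is `/mnt/Magneto/9-VideoClub/30-Series` on the NAS. Kubernetes also rejects subPaths that are absolute or contain `..`. A hedged Python sketch of that resolution; `resolve_subpath` is a hypothetical helper, not a Kubernetes API.

```python
from pathlib import PurePosixPath


def resolve_subpath(volume_root: str, sub_path: str) -> str:
    """Return the directory a subPath points to inside a volume.

    Mirrors two Kubernetes validation rules: the subPath must be
    relative and must not contain a '..' component.
    """
    p = PurePosixPath(sub_path)
    if p.is_absolute():
        raise ValueError(f"subPath must be relative: {sub_path}")
    if ".." in p.parts:
        raise ValueError(f"subPath must not contain '..': {sub_path}")
    return str(PurePosixPath(volume_root) / p)


export = "/mnt/Magneto/9-VideoClub"  # NFS export behind pv-9 / pvc-9
for mount, sub in {"/downloads": "00-Tmp/sickchill/downloads",
                   "/tv": "30-Series", "/anime": "40-Anime"}.items():
    print(mount, "->", resolve_subpath(export, sub))
```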

Other method

To skip declaring a PersistentVolume, the NFS directory can be declared directly in the Deployment/pod:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod
spec:
  containers:
    - name: sickchillserver
      image: lscr.io/linuxserver/sickchill
      volumeMounts:
        - mountPath: /config
          name: tr-config
        - mountPath: /downloads
          name: tr-videoclub
          subPath: 00-Tmp/sickchill/downloads
        - mountPath: /tv
          name: tr-videoclub
          subPath: 30-Series
        - mountPath: /anime
          name: tr-videoclub
          subPath: 40-Anime
  volumes:
    - name: tr-videoclub
      nfs:
        server: 192.168.1.40
        path: /mnt/Magneto/9-VideoClub
    - name: tr-config
      hostPath:
        path: /usr/kubedata/sickchillserver/config
        type: DirectoryOrCreate
```

Add the guest agent to TrueNAS

Network organisation

I split my 192.168.1.* network into 3 groups.

Later on I will split it into 2 subnets (technical / functional and other).

Technical: 1-99

| Device | IP (192.168.1.x) |
|---|---|
| Orange gateway (DHCP disabled) | 1 |
| Orange TV | 2 |
| Proxmox | 10 |
| Kubernetes | 20 |
| TrueNAS | 40 |
| Pi-hole DNS/DHCP (UDP 53/67/547) | 50 |

Functional: 100-199

| Service | IP (192.168.1.x) |
|---|---|
| Kubernetes service IP range | 100-149 |
| Kubernetes Dashboard (in the service range) | 100 |

Other/PC/IoT: 200-254
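The plan can be encoded to check which group an address falls into before assigning it. A minimal Python sketch, assuming a single 192.168.1.0/24 network and the three ranges above; `group_of` is a hypothetical helper.

```python
import ipaddress

NETWORK = ipaddress.ip_network("192.168.1.0/24")
# (group, first host, last host) on the last octet, from the plan above
GROUPS = [
    ("Technical", 1, 99),
    ("Functional", 100, 199),
    ("Other/PC/IoT", 200, 254),
]


def group_of(ip: str) -> str:
    """Return the plan group an address belongs to."""
    addr = ipaddress.ip_address(ip)
    if addr not in NETWORK:
        raise ValueError(f"{ip} is outside {NETWORK}")
    host = int(addr) - int(NETWORK.network_address)  # last octet in a /24
    for name, low, high in GROUPS:
        if low <= host <= high:
            return name
    raise ValueError(f"{ip} is not assignable in this plan")  # .0 and .255


print(group_of("192.168.1.50"))   # Technical (Pi-hole)
print(group_of("192.168.1.100"))  # Functional (Kubernetes Dashboard)
```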

Network configuration on Ubuntu (netplan):

```bash
sudo nano /etc/netplan/*.yaml
sudo netplan apply
```

RSA key

Add the public key to each server for SSH login:

```bash
cat id_rsa_ubuntu.pub >> ~/.ssh/authorized_keys
sudo systemctl restart ssh
```

Adding the Sickchill pod

Deployment

The Docker command, with the filesystem prepared:

```bash
docker run -d \
  --name=sickchill \
  -e PUID=1000 \
  -e PGID=1000 \
  -e TZ=Europe/London \
  -p 8081:8081 \
  -v /path/to/data:/config \
  -v /path/to/data:/downloads \
  -v /path/to/data:/tv \
  --restart unless-stopped \
  lscr.io/linuxserver/sickchill
```

Translation into a Kubernetes Deployment:

apiVersion: apps/v1

kind: Deployment

metadata:

name: sickchillserver

namespace: default

labels:

app: sickchill

spec:

replicas: 1

selector:

matchLabels:

app: sickchill

template:

metadata:

labels:

run: sickchillserver

app: sickchill

spec:

containers:

- name: sickchillserver

image: lscr.io/linuxserver/sickchill

env:

- name: "PUID"

value: "1000"

- name: "PGID"

value: "1000"

ports:

- containerPort: 8081

name: tr-http

volumeMounts:

- mountPath: /config

name: tr-config

- mountPath: /downloads

name: tr-downloads

- mountPath: /tv

name: tr-tv

- mountPath: /anime

name: tr-anime

volumes:

- name: tr-anime

hostPath:

type: DirectoryOrCreate

path: /Videoclub/40-Anime

- name: tr-tv

hostPath:

type: DirectoryOrCreate

path: /Videoclub/30-Series

- name: tr-downloads

hostPath:

type: DirectoryOrCreate

path: /Videoclub/00-Tmp/sickchill/downloads

- name: tr-config

hostPath:

type: DirectoryOrCreate

path: /usr/kubedata/sickchillserver/config

---

apiVersion: v1

kind: Service

metadata:

name: sickchill-svc

spec:

selector:

app: sickchill

ports:

- name: "http"

port: 8081

targetPort: 8081

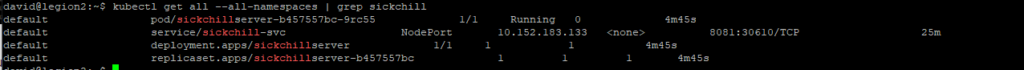

type: NodePortpuis on recupere le recupere le port d’exposition

```bash
kubectl get all --all-namespaces | grep sickchill
```

Result: the dashboard is reachable at https://<master-ip>:30610 (30610 being the NodePort assigned to `sickchill-svc`).
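The NodePort is the second number of the PORT(S) column (`port:nodePort/protocol`). A small Python sketch that extracts it from one line of the `kubectl get` output; the sample line is made up for illustration (only the `8081:30610/TCP` mapping matches the result above), and `node_ports` is a hypothetical helper. Without any parsing, `kubectl get svc sickchill-svc -o jsonpath='{.spec.ports[0].nodePort}'` prints the same number directly.

```python
import re
from typing import Dict

# PORT(S) entries of a Service, e.g. "8081:30610/TCP" (port:nodePort/protocol)
PORT_RE = re.compile(r"\b(\d+):(\d+)/(TCP|UDP|SCTP)\b")


def node_ports(kubectl_line: str) -> Dict[int, int]:
    """Map each service port to its NodePort from one line of `kubectl get` output."""
    return {int(port): int(node) for port, node, _proto in PORT_RE.findall(kubectl_line)}


# Hypothetical output line: the cluster IP and age are made up.
line = "default  service/sickchill-svc  NodePort  10.152.183.10  <none>  8081:30610/TCP  5m"
print(node_ports(line))  # {8081: 30610}
```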

Transmission changes

Changes to the Transmission deployment:

```yaml
# added to the Transmission container
volumeMounts:
  - mountPath: /downloads-sickchill
    name: tr-media-sickchill
  - mountPath: /script
    name: tr-script
# added to the pod spec
volumes:
  - name: tr-media-sickchill
    hostPath:
      type: DirectoryOrCreate
      path: /Videoclub/00-Tmp/sickchill/downloads
  - name: tr-script
    hostPath:
      type: DirectoryOrCreate
      path: /Videoclub/00-Tmp/transmission/script
```

Update the Transmission configuration (settings.json):

```json
"script-torrent-done-enabled": true,
"script-torrent-done-filename": "/script/transmission-purge-completed_lite.sh",
```

The scripts are stored in the /script directory.
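Transmission writes its in-memory settings back to settings.json when it stops, so edits made while it runs are lost; edit the file with the pod stopped (for example scaled to 0 replicas). A Python sketch that applies the two keys above, assuming the file is reachable from where the script runs; `with_done_script` and `update_settings_file` are hypothetical helpers.

```python
import json
from pathlib import Path

# Script path as seen inside the Transmission container (the /script mount)
DONE_SCRIPT = "/script/transmission-purge-completed_lite.sh"


def with_done_script(settings: dict, script: str = DONE_SCRIPT) -> dict:
    """Return a copy of the settings with the torrent-done script enabled."""
    updated = dict(settings)
    updated["script-torrent-done-enabled"] = True
    updated["script-torrent-done-filename"] = script
    return updated


def update_settings_file(path: Path) -> None:
    """Rewrite settings.json in place; run it only while Transmission is stopped."""
    settings = json.loads(path.read_text(encoding="utf-8"))
    path.write_text(json.dumps(with_done_script(settings), indent=4) + "\n",
                    encoding="utf-8")
```

For example, `update_settings_file(Path("settings.json"))` run from the Transmission config directory on the node; all other keys in the file are kept unchanged.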

Pi-hole as DNS server

Stop the DNS resolver built into Ubuntu (systemd-resolved):

```bash
sudo systemctl stop systemd-resolved.service
sudo systemctl disable systemd-resolved.service
```

Temporarily point Ubuntu's DNS at a public resolver (8.8.8.8, for example) by editing `/etc/resolv.conf` (`sudo nano /etc/resolv.conf`):

```
nameserver 8.8.8.8
```

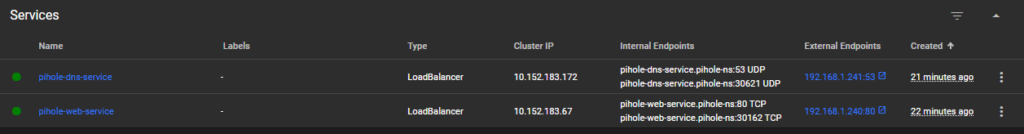

Install a load balancer

Install MetalLB:

https://metallb.universe.tf/installation/

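```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 192.168.1.1-192.168.1.5
      - 192.168.1.200-192.168.1.250
```

This ConfigMap format is the one used by MetalLB before v0.13 (each range goes on its own line under `addresses`); newer releases declare pools with `IPAddressPool` and `L2Advertisement` resources instead.

Install the LoadBalancer services: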

```yaml
apiVersion: v1
kind: Service
metadata:
  name: pihole-web-service
  namespace: pihole-ns
spec:
  selector:
    app: pihole
  ports:
    - name: web
      protocol: TCP
      port: 80
      targetPort: 80
  type: LoadBalancer
---
apiVersion: v1
kind: Service
metadata:
  name: pihole-dns-service
  namespace: pihole-ns
spec:
  selector:
    app: pihole
  ports:
    - name: dns
      protocol: UDP
      port: 53
      targetPort: 53
  type: LoadBalancer
```
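Addresses in the MetalLB pool are handed out to LoadBalancer services, so no other device on the LAN may use them. A Python sketch comparing the pool ranges of the MetalLB ConfigMap with the static addresses from the network organisation table; `pool_addresses` and `conflicts` are hypothetical helpers.

```python
import ipaddress
from typing import Dict, List


def pool_addresses(ranges: List[str]) -> set:
    """Expand MetalLB-style 'start-end' ranges into a set of addresses."""
    result = set()
    for r in ranges:
        start, end = (ipaddress.ip_address(part.strip()) for part in r.split("-"))
        result.update(ipaddress.ip_address(i) for i in range(int(start), int(end) + 1))
    return result


def conflicts(ranges: List[str], static: Dict[str, str]) -> Dict[str, str]:
    """Return the static hosts whose IP falls inside the MetalLB pool."""
    pool = pool_addresses(ranges)
    return {name: ip for name, ip in static.items() if ipaddress.ip_address(ip) in pool}


POOL = ["192.168.1.1-192.168.1.5", "192.168.1.200-192.168.1.250"]
STATIC = {  # from the network organisation table
    "Orange gateway": "192.168.1.1",
    "Orange TV": "192.168.1.2",
    "Proxmox": "192.168.1.10",
    "Kubernetes": "192.168.1.20",
    "TrueNAS": "192.168.1.40",
    "Pi-hole": "192.168.1.50",
}
print(conflicts(POOL, STATIC))  # {'Orange gateway': '192.168.1.1', 'Orange TV': '192.168.1.2'}
```

With the pool as written, the gateway (.1) and the Orange TV box (.2) fall inside `192.168.1.1-192.168.1.5`; a pool inside the 100-149 range that the plan reserves for Kubernetes services returns an empty result.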

Point Ubuntu's DNS back at the Pi-hole DNS (192.168.1.241):

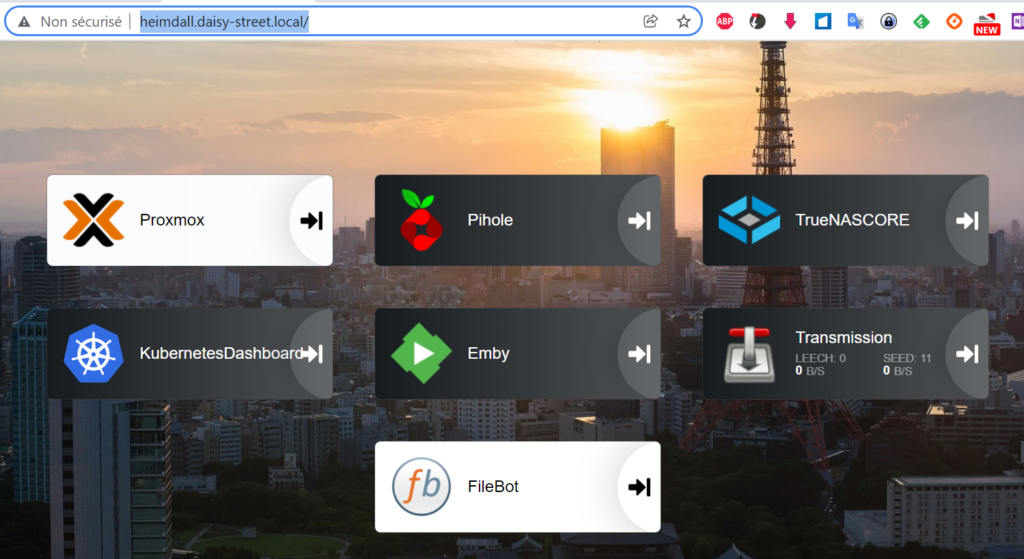

Edit `/etc/resolv.conf` (`sudo nano /etc/resolv.conf`):

```
nameserver 192.168.1.241
```

heimdall.daisy-street.local

Service

Switch the heimdall service from NodePort to ClusterIP.

Ingress

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: heimdall-svc-ingress
  namespace: default
spec:
  ingressClassName: public
  rules:
    - host: heimdall.daisy-street.local
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: heimdall-svc
                port:
                  number: 80
```

Hosts

```
192.168.1.26 heimdall.daisy-street.local
```

Result
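The Ingress routes on the HTTP `Host` header, so the rule can also be checked without touching the hosts file by sending the request to the node IP with that header. A Python sketch using only the standard library; it assumes the `public` ingress controller answers on port 80 of 192.168.1.26 (the address from the hosts entry), and `ingress_request` and `check` are hypothetical helpers.

```python
import urllib.request

INGRESS_IP = "192.168.1.26"           # node address used in the hosts entry
HOST = "heimdall.daisy-street.local"  # must match spec.rules[].host of the Ingress


def ingress_request(host: str = HOST, ip: str = INGRESS_IP,
                    path: str = "/") -> urllib.request.Request:
    """Build a request addressed to the node IP but carrying the virtual host name."""
    return urllib.request.Request(f"http://{ip}{path}", headers={"Host": host})


def check(timeout: float = 5.0) -> int:
    """Send the request and return the HTTP status (needs LAN access).

    urlopen raises urllib.error.HTTPError for 4xx/5xx answers.
    """
    with urllib.request.urlopen(ingress_request(), timeout=timeout) as resp:
        return resp.status
```

Calling `check()` from a machine on the LAN should return a 2xx status when the rule routes to `heimdall-svc`.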