internet , metallb , ingress , service , pod

Inbound

traffic

++ +---------+

|| |ConfigMap|

|| +--+------+

|| |

|| | CIDR range to provision

|| v

|| +--+----------+

|| |MetalLB | +-------+

|| |Load balancer| |Ingress|

|| +-+-----------+ +---+---+

|| | |

|| | External IP assigned |Rules described in spec

|| | to service |

|| v v

|| +--+--------------------+ +---+------------------+

|| | | | Ingress Controller |

|---->+ ingress-nginx service +----->+ (NGINX pod) |

+---->| +----->+ |

+-----------------------+ +----------------------+

||

VV

+-----------------+

| Backend service |

| (app-lb) |

| |

+-----------------+

||

VV

+--------------------+

| Backend pod |

| (httpbin) |

| |

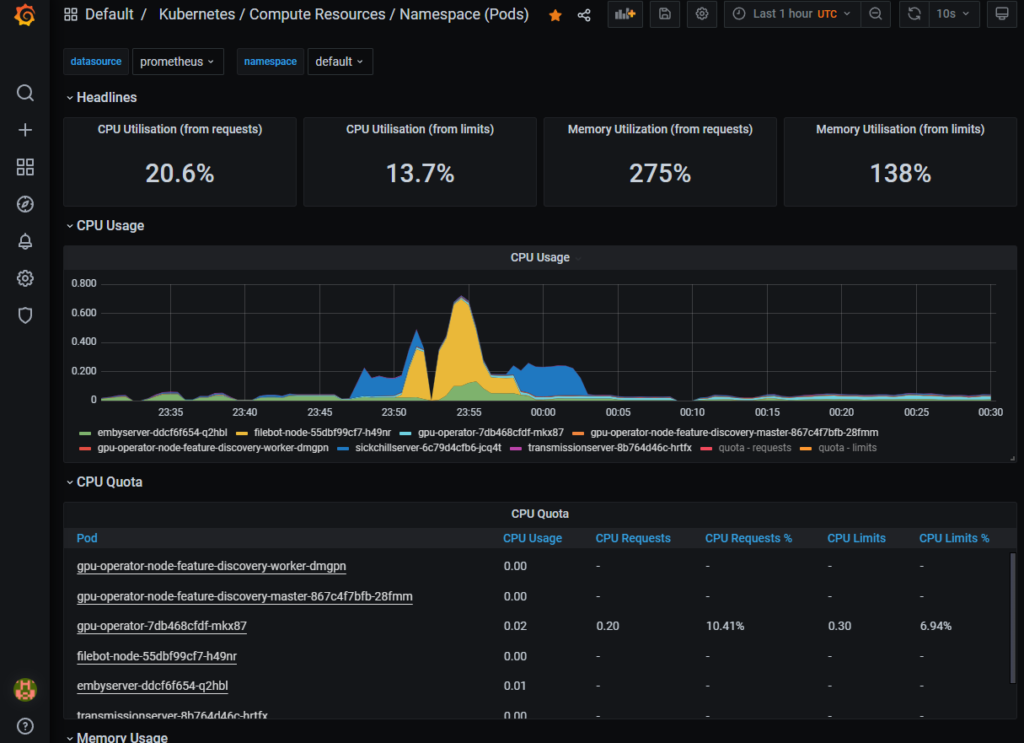

+--------------------+Installation complete de kubernetes , minikube , prometheus et grafana (et pods)

On recommence kubernetes

A force de triturer les parametre de la VM et du kubernetes , j’ai briqué mon dashboard et la CPU est constament a 60% au repos.

Je decide de recree un VM Ubunutu a partir de mon template et d’essayer minikube a la place de microk8s

Les pods que je desire :

- emby

- sickchill

- transmission

- filebot

- prometheus

- grafana

Clonage d’un VM Ubunutu

Je clone en Full mon template d’ubuntu

Passer en IP Static

sudo nano /etc/netplan/00-installer-config.yamlnetwork:

version: 2

renderer: networkd

ethernets:

ens18:

dhcp4: no

addresses: [192.168.1.20/24]

gateway4: 192.168.1.1

nameservers:

addresses: [192.168.1.1]recuperation de mon filesytem

sous PVE:

Je monte la VM de mon precedant clone microk8s dans ma VM minikube afin de recopier le contenu dans la nouvelle VM.

nano /etc/pve/qemu-server/105.conf

scsi1: cyclops:vm-100-disk-0,size=32G

scsi2: cyclops:vm-105-disk-0,size=32G

dans ma nouvelle VM (id:105) :

je cherche mes 2 disk(100;1005)

ls -lh /dev/disk/by-id/ata-QEMU_DVD-ROM_QM00001 -> ../../sr0

ata-QEMU_DVD-ROM_QM00003 -> ../../sr1

scsi-0QEMU_QEMU_HARDDISK_drive-scsi0 -> ../../sda

scsi-0QEMU_QEMU_HARDDISK_drive-scsi0-part1 -> ../../sda1

scsi-0QEMU_QEMU_HARDDISK_drive-scsi0-part2 -> ../../sda2

scsi-0QEMU_QEMU_HARDDISK_drive-scsi1 -> ../../sdc

scsi-0QEMU_QEMU_HARDDISK_drive-scsi1-part1 -> ../../sdc1

scsi-0QEMU_QEMU_HARDDISK_drive-scsi2 -> ../../sdb

le scsi2 (disk 105) n’a pas de parttion , j’en crée une

sudo fdisk /dev/sdbformaté la partition

sudo mkfs.ext4 /dev/sdb1creation des repertoires de montage

sudo mkdir /usr/kubedata

sudo mkdir /usr/old_kubedataj’ajoute en montage automatique mon nouveau disk(105)

sudo nano /etc/fstab

/dev/disk/by-id/scsi-0QEMU_QEMU_HARDDISK_drive-scsi2-part1 /usr/kubedata ext4 defaults 0 0

sudo mount -aet je monte en manuel l’ancien disk(100)

sudo mount /dev/sdc1 /usr/old_kubedata/copie du contenu de l’ancien disk(100) dans le nouveau disk(105)

sudo cp -r /usr/old_kubedata/* /usr/kubedata/demontage de l’ancien disk (100)

sudo umount /usr/old_kubedata/

sudo rm /usr/old_kubedata/ -Rsous PVE:

Je demonte l’ancien disk(100) de ma nouvelle VM(105)

nano /etc/pve/qemu-server/105.confsupression de la ligne

scsi1: cyclops:vm-100-disk-0,size=32GInstallation de minikube

j’install Docker 1

sudo apt-get update

sudo apt-get install \

ca-certificates \

curl \

gnupg \

lsb-release

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

echo \

"deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu \

$(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

sudo apt-get update

sudo apt-get install docker-ce docker-ce-cli containerd.ioj’installe minikube 2

curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64

sudo install minikube-linux-amd64 /usr/local/bin/minikube

sudo usermod -aG docker $USER && newgrp docker

minikube start

minikube kubectl -- get po -A

nano ./.bashrc

alias kubectl="minikube kubectl --"modifier l’editeur par defaut

sudo nano /etc/environmentKUBE_EDITOR="/usr/bin/nano"Install Prometheus and Grafana

source 3

install Helm

curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3

chmod 700 get_helm.sh

./get_helm.shhelm versionInstall Prometheus and Grafana on Kubernetes using Helm 3

helm repo add stable https://charts.helm.sh/stable

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm install stable prometheus-community/kube-prometheus-stackkubectl edit svc stable-kube-prometheus-sta-prometheusChanger ClusterIP pour LoadBalancer/NodePort

kubectl edit svc stable-grafanaChanger ClusterIP pour LoadBalancer/NodePort

Web Grafana

UserName: admin

Password: prom-operatorsinon récupéré le password grafana

kubectl get secret --namespace default grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echoInstall SickChill

apiVersion: apps/v1

kind: Deployment

metadata:

name: sickchillserver

namespace: default

labels:

app: sickchill

spec:

replicas: 1

selector:

matchLabels:

app: sickchill

template:

metadata:

labels:

run: sickchillserver

app: sickchill

spec:

containers:

- name: sickchillserver

image: lscr.io/linuxserver/sickchill

env:

- name: "PUID"

value: "1000"

- name: "PGID"

value: "1000"

ports:

- containerPort: 8081

name: tr-http

volumeMounts:

- mountPath: /config

name: tr-config

- mountPath: /downloads

name: tr-videoclub

subPath: 00-Tmp/sickchill/downloads

- mountPath: /tv

name: tr-videoclub

subPath: 30-Series

- mountPath: /anime

name: tr-videoclub

subPath: 40-Anime

volumes:

- name: tr-videoclub

nfs:

server: 192.168.1.40

path: /mnt/Magneto/9-VideoClub

- name: tr-config

hostPath:

type: DirectoryOrCreate

path: /usr/kubedata/sickchillserver/config

---

apiVersion: v1

kind: Service

metadata:

name: sickchill-svc

spec:

selector:

app: sickchill

ports:

- name: "http"

port: 8081

targetPort: 8081

type: NodePortInstall Transmission

apiVersion: apps/v1

kind: Deployment

metadata:

name: transmissionserver

namespace: default

labels:

app: transmission

spec:

replicas: 1

selector:

matchLabels:

app: transmission

template:

metadata:

labels:

run: transmissionserver

app: transmission

spec:

containers:

- name: transmissionserver

image: lscr.io/linuxserver/transmission

env:

- name: "PUID"

value: "1000"

- name: "PGID"

value: "1000"

ports:

- containerPort: 9091

name: tr-http

- containerPort: 51413

name: tr-https

volumeMounts:

- mountPath: /config

name: tr-config

- mountPath: /downloads-sickchill

name: tr-media-sickchill

- mountPath: /script

name: tr-script

- mountPath: /watch

name: tr-watch

volumes:

- name: tr-config

hostPath:

type: DirectoryOrCreate

path: /usr/kubedata/transmissionserver/config

- name: tr-media-sickchill

hostPath:

type: DirectoryOrCreate

path: /Videoclub/00-Tmp/sickchill/downloads

- name: tr-script

hostPath:

type: DirectoryOrCreate

path: /Videoclub/00-Tmp/transmission/script

- name: tr-watch

hostPath:

type: DirectoryOrCreate

path: /Videoclub/00-Tmp/transmission/watch

---

apiVersion: v1

kind: Service

metadata:

name: transmission

spec:

selector:

app: transmission

ports:

- name: "http"

port: 9091

targetPort: 9091

- name: "https"

port: 51413

targetPort: 51413

type: NodePortInstall Emby

apiVersion: apps/v1

kind: Deployment

metadata:

name: embyserver

namespace: default

labels:

app: emby

spec:

replicas: 1

selector:

matchLabels:

app: emby

template:

metadata:

labels:

run: embyserver

app: emby

spec:

containers:

- name: embyserver

image: emby/embyserver:latest

env:

- name: "UID"

value: "1000"

- name: "GID"

value: "100"

- name: "GIDLIST"

value: "100"

ports:

- containerPort: 8096

name: emby-http

- containerPort: 8920

name: emby-https

volumeMounts:

- mountPath: /config

name: emby-config

- mountPath: /mnt/videoclub

name: emby-media

volumes:

- name: emby-media

nfs:

server: 192.168.1.40

path: /mnt/Magneto/9-VideoClub

- name: emby-config

hostPath:

type: DirectoryOrCreate

path: /usr/kubedata/embyserver/config

---

apiVersion: v1

kind: Service

metadata:

name: emby

spec:

selector:

app: emby

ports:

- name: "http"

port: 8096

targetPort: 8096

- name: "https"

port: 8920

targetPort: 8920

type: NodePortInstall FileBot

apiVersion: apps/v1

kind: Deployment

metadata:

name: filebot-node

namespace: default

labels:

app: filebot

spec:

replicas: 1

selector:

matchLabels:

app: filebot

template:

metadata:

labels:

run: filebot-node

app: filebot

spec:

containers:

- name: filebot-node

image: maliciamrg/filebot-node-479

ports:

- containerPort: 5452

name: filebot-http

volumeMounts:

- mountPath: /data

name: filebot-data

- mountPath: /videoclub

name: filebot-media

volumes:

- name: filebot-data

hostPath:

type: DirectoryOrCreate

path: /usr/kubedata/filebot-node/data

- name: filebot-media

nfs:

server: 192.168.1.40

path: /mnt/Magneto/9-VideoClub

---

apiVersion: v1

kind: Service

metadata:

name: filebot

spec:

selector:

app: filebot

ports:

- name: "http"

port: 5452

targetPort: 5452

type: NodePortResultat

david@legion2:~$ kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default alertmanager-stable-kube-prometheus-sta-alertmanager-0 2/2 Running 2 (4h21m ago) 14h 172.17.0.2 minikube <none> <none>

default embyserver-56689875b4-wmxww 1/1 Running 0 53m 172.17.0.12 minikube <none> <none>

default filebot-node-7786dfbf67-fh7s8 1/1 Running 0 47m 172.17.0.13 minikube <none> <none>

default prometheus-stable-kube-prometheus-sta-prometheus-0 2/2 Running 2 (4h21m ago) 14h 172.17.0.7 minikube <none> <none>

default sickchillserver-7494d84848-cwjkm 1/1 Running 0 4h15m 172.17.0.8 minikube <none> <none>

default stable-grafana-5dcdf4bbc6-q5shg 3/3 Running 3 (4h21m ago) 14h 172.17.0.3 minikube <none> <none>

default stable-kube-prometheus-sta-operator-5fd44cc9bf-nmgdq 1/1 Running 1 (4h21m ago) 14h 172.17.0.6 minikube <none> <none>

default stable-kube-state-metrics-647c4868d9-f9vrb 1/1 Running 2 (4h19m ago) 14h 172.17.0.5 minikube <none> <none>

default stable-prometheus-node-exporter-j6w5f 1/1 Running 1 (4h21m ago) 14h 192.168.49.2 minikube <none> <none>

default transmissionserver-7d5d8c49db-cxktx 1/1 Running 0 62m 172.17.0.11 minikube <none> <none>

ingress-nginx ingress-nginx-admission-create--1-nzdhc 0/1 Completed 0 3h51m 172.17.0.10 minikube <none> <none>

ingress-nginx ingress-nginx-admission-patch--1-mxxmc 0/1 Completed 1 3h51m 172.17.0.9 minikube <none> <none>

ingress-nginx ingress-nginx-controller-5f66978484-w8cqj 1/1 Running 0 3h51m 172.17.0.9 minikube <none> <none>

kube-system coredns-78fcd69978-cq2hn 1/1 Running 1 (4h21m ago) 15h 172.17.0.4 minikube <none> <none>

kube-system etcd-minikube 1/1 Running 1 (4h21m ago) 15h 192.168.49.2 minikube <none> <none>

kube-system kube-apiserver-minikube 1/1 Running 1 (4h21m ago) 15h 192.168.49.2 minikube <none> <none>

kube-system kube-controller-manager-minikube 1/1 Running 1 (4h21m ago) 15h 192.168.49.2 minikube <none> <none>

kube-system kube-ingress-dns-minikube 1/1 Running 0 3h44m 192.168.49.2 minikube <none> <none>

kube-system kube-proxy-d8m7r 1/1 Running 1 (4h21m ago) 15h 192.168.49.2 minikube <none> <none>

kube-system kube-scheduler-minikube 1/1 Running 1 (4h21m ago) 15h 192.168.49.2 minikube <none> <none>

kube-system storage-provisioner 1/1 Running 4 (4h19m ago) 15h 192.168.49.2 minikube <none> <none>

metallb-system controller-66bc445b99-wvdnq 1/1 Running 0 3h44m 172.17.0.10 minikube <none> <none>

metallb-system speaker-g49dw 1/1 Running 0 3h44m 192.168.49.2 minikube <none> <none>

david@legion2:~$ kubectl get svc -A -o wide

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

default alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 14h app.kubernetes.io/name=alertmanager

default emby LoadBalancer 10.101.254.121 192.168.1.102 8096:30524/TCP,8920:30171/TCP 55m app=emby

default filebot LoadBalancer 10.106.51.20 192.168.1.103 5452:31628/TCP 48m app=filebot

default kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 15h <none>

default prometheus-operated ClusterIP None <none> 9090/TCP 14h app.kubernetes.io/name=prometheus

default sickchill-svc LoadBalancer 10.107.60.50 192.168.1.100 8081:32026/TCP 4h16m app=sickchill

default stable-grafana LoadBalancer 10.102.236.29 192.168.1.104 80:31801/TCP 15h app.kubernetes.io/instance=stable,app.kubernetes.io/name=grafana

default stable-kube-prometheus-sta-alertmanager ClusterIP 10.105.89.179 <none> 9093/TCP 15h alertmanager=stable-kube-prometheus-sta-alertmanager,app.kubernetes.io/name=alertmanager

default stable-kube-prometheus-sta-operator ClusterIP 10.99.183.242 <none> 443/TCP 15h app=kube-prometheus-stack-operator,release=stable

default stable-kube-prometheus-sta-prometheus NodePort 10.110.38.166 <none> 9090:32749/TCP 15h app.kubernetes.io/name=prometheus,prometheus=stable-kube-prometheus-sta-prometheus

default stable-kube-state-metrics ClusterIP 10.104.176.119 <none> 8080/TCP 15h app.kubernetes.io/instance=stable,app.kubernetes.io/name=kube-state-metrics

default stable-prometheus-node-exporter ClusterIP 10.106.253.56 <none> 9100/TCP 15h app=prometheus-node-exporter,release=stable

default transmission LoadBalancer 10.104.43.182 192.168.1.101 9091:31067/TCP,51413:31880/TCP 64m app=transmission

ingress-nginx ingress-nginx-controller NodePort 10.107.183.72 <none> 80:31269/TCP,443:30779/TCP 3h52m app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

ingress-nginx ingress-nginx-controller-admission ClusterIP 10.97.189.150 <none> 443/TCP 3h52m app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

kube-system kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 15h k8s-app=kube-dns

kube-system stable-kube-prometheus-sta-coredns ClusterIP None <none> 9153/TCP 15h k8s-app=kube-dns

kube-system stable-kube-prometheus-sta-kube-controller-manager ClusterIP None <none> 10257/TCP 15h component=kube-controller-manager

kube-system stable-kube-prometheus-sta-kube-etcd ClusterIP None <none> 2379/TCP 15h component=etcd

kube-system stable-kube-prometheus-sta-kube-proxy ClusterIP None <none> 10249/TCP 15h k8s-app=kube-proxy

kube-system stable-kube-prometheus-sta-kube-scheduler ClusterIP None <none> 10251/TCP 15h component=kube-scheduler

kube-system stable-kube-prometheus-sta-kubelet ClusterIP None <none> 10250/TCP,10255/TCP,4194/TCP 14h <none>

prometheus et grafana

“do it simple”

Apres avoir desintaller les precedante version de prometheus et grafana.

j’installe l’addons

microk8s enable prometheus

dans le pod grafana je modifie la password admin

grafana-cli admin reset-admin-password adminApres log

Delete multiple pod

kubectl get pod -n kubernetes-dashboard | grep Evicted | awk '{print $1}' | xargs kubectl delete pod -n kubernetes-dashboard

kubectl get pods --all-namespaces | grep Evicted | awk '{print $2," -n ",$1}' | xargs kubectl delete podChanger l’éditeur pour nano

Linux

On Linux (Ubuntu, for example), typically the default command-line EDITOR is Vim. If so, no further action is needed to use the kubectl edit command. If you want to use a different editor, create an environment variable named KUBE_EDITOR with the value set to the path of your preferred text editor.

JAVA_HOME

1.1 Edit /etc/environment file with a text editor like vim or nano, need root or sudo.

Add JAVA_HOME at the next line, and points to a specified JDK folder directly.

PATH=”/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games” JAVA_HOME=/usr/lib/jvm/adoptopenjdk-11-hotspot-amd64

source /etc/environment

echo $JAVA_HOME

Note

The new changes will disappear if we close the current session or reopen a new terminal because a new shell does not trigger the /etc/environment. Try to restart the Ubuntu or login again; the new changes in /etc/environment will apply automatically.

whereis nano

nano: /usr/bin/nano /usr/share/nano /usr/share/man/man1/nano.1.gz

sudo nano /etc/environmentKUBE_EDITOR="/usr/bin/nano"share gpu

https://3os.org/guides/proxmox/pgu-passthrough-to-vm/

la CM et le CPU son non compatible Iommu

tant pis

Monitoring

Installer Helm

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm repo update

kubectl delete namespace monitoring

kubectl delete crd alertmanagerconfigs.monitoring.coreos.com

kubectl delete crd alertmanagers.monitoring.coreos.com

kubectl delete crd podmonitors.monitoring.coreos.com

kubectl delete crd probes.monitoring.coreos.com

kubectl delete crd prometheuses.monitoring.coreos.com

kubectl delete crd prometheusrules.monitoring.coreos.com

kubectl delete crd servicemonitors.monitoring.coreos.com

kubectl delete crd thanosrulers.monitoring.coreos.com

kubectl create namespace monitoring

helm install kube-prometheus-stack prometheus-community/kube-prometheus-stack -n monitoring --debug --set prometheusOperator.admissionWebhooks.enabled=false --set prometheusOperator.tls.enabled=falseGrafana

Recupere le user et mdp pour grafana

sudo kubectl get secret --namespace monitoring prometheus-grafana -o yamlAjouter un service loadbalancer pour acces via lan

apiVersion: v1

kind: Service

metadata:

name: grafana-web-service

namespace: monitoring

spec:

selector:

app: kube-prometheus-stack-grafana

ports:

- name: web

protocol: TCP

port: 80

targetPort: 80

type: LoadBalancerIf you are using Prometheus Operator then user/pass is:

user: admin

pass: prom-operatorErreur

J’ai eu l’erreur

Error: INSTALLATION FAILED: Kubernetes cluster unreachable: Get "http://localhost:8080/version?timeout=32s": dial tcp [::1]:8080: connect: connection refused

pour la coriger il faut que le user ai le parametre de kube dans son home , pour ce faire :

kubectl config view --raw > ~/.kube/config

chmod go-r ~/.kube/config

ce qui creera un fichier config tel que :

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: ---certif---

server: https://---ip---:---port---

name: microk8s-cluster

contexts:

- context:

cluster: microk8s-cluster

user: ---nomuser---

name: microk8s

current-context: microk8s

kind: Config

preferences: {}

users:

- name: ---nomuser---

user:

token: ---token---

source :

https://stash.run/docs/v2021.6.18/guides/latest/monitoring/prometheus_operator/

Strategie de sauvegarde

https://drive.google.com/file/d/1tl92l6FouzUuku1GbnNqbYneltVnVZed/view?usp=sharing

PiHole en VM

suite au pb de config pour le dhcp dans kubernetes , je suprime le pod pihole et j’intalle pihole sur une vm ubuntu dans proxmox

Resultat immediat avec 100% de reussite