Conclusion

When you access NFS mount points as root on the client side, export them with the no_root_squash option. This ensures you don’t run into access-related issues on those mount points.

By default, NFS maps requests from the client’s root user to an unprivileged account (root squashing), so files created as root end up owned by nobody:nogroup.

This is a security feature that prevents root privileges from being shared unless specifically requested.

To keep root ownership on the share, enable the “no_root_squash” option in the NFS server’s /etc/exports file.
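As a rough sketch of what such an entry looks like on a generic Linux NFS server: the helper below only prints an /etc/exports line and changes nothing on the system. The name `export_line`, the 192.168.1.0/24 client range and every option other than no_root_squash are assumptions for illustration. On an appliance such as TrueNAS, exports are normally managed through its own interface rather than by editing the file by hand.

```shell
#!/bin/sh
# Hypothetical helper: print an /etc/exports entry with root squashing disabled.
# $1 = exported directory, $2 = allowed client or network.
export_line() {
    printf '%s %s(rw,sync,no_subtree_check,no_root_squash)\n' "$1" "$2"
}

# The path comes from this page; the client network is an assumption.
export_line /mnt/Magneto/9-VideoClub 192.168.1.0/24

# To apply it on a plain Linux NFS server (not run here):
#   export_line /mnt/Magneto/9-VideoClub 192.168.1.0/24 | sudo tee -a /etc/exports
#   sudo exportfs -ra
```

Restricting the entry to one network or host keeps the exposure of no_root_squash as narrow as possible.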

https://forum.proxmox.com/threads/mount-nfs-shares-in-a-host.78761/

For the media center LXC, which runs as an unprivileged container:

I mounted the videoclub NFS share in PVE,

then added the following line to /etc/pve/lxc/105.conf:

mp0: /mnt/pve/videoclub,mp=/usr/VideoClubsmb

//192.168.1.40/9-VideoClub /Videoclub cifs uid=0,credentials=/home/david/.smb,iocharset=utf8,noperm 0 0

nfs

mount -t nfs 192.168.1.40:/mnt/Magneto/9-VideoClub /Videoclub

sudo apt install nfs-common

Working PersistentVolume definition:

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv-9
      labels:
        pv: pv-9  # label needed by the matchLabels selector of the claim pvc-9
    spec:
      capacity:
        storage: 1000Gi
      volumeMode: Filesystem
      accessModes:
        - ReadWriteOnce
      persistentVolumeReclaimPolicy: Recycle
      storageClassName: slow
      mountOptions:
        - hard
        - nfsvers=4.1
      nfs:
        path: /mnt/Magneto/9-VideoClub
        server: 192.168.1.40

Then claim the volume (working):

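    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: pvc-9
    spec:
      storageClassName: slow  # must match the storageClassName of pv-9
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 1000Gi
      selector:
        matchLabels:
          pv: pv-9  # binds only to a PersistentVolume carrying this label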
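Before mounting the claim in a pod, it helps to confirm that it actually bound to the volume. A minimal sketch, assuming kubectl is configured for the cluster and the claim lives in the default namespace; `pvc_phase` is a hypothetical helper name. A claim that stays Pending usually means that no PersistentVolume matches its selector labels, storage class, size or access mode.

```shell
#!/bin/sh
# Print the phase of a PersistentVolumeClaim ("Bound" once it is attached to a PV).
# $1 = claim name, $2 = namespace (optional; "default" is an assumption).
pvc_phase() {
    kubectl get pvc "$1" --namespace "${2:-default}" -o jsonpath='{.status.phase}'
}

# Usage (needs access to the cluster):
#   pvc_phase pvc-9
```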

Then mount the PVC in each pod Deployment using subPaths, for example:

    apiVersion: v1
    kind: Pod
    metadata:
      name: mypod
    spec:
      containers:
        - name: sickchillserver
          image: lscr.io/linuxserver/sickchill
          volumeMounts:
            - mountPath: /config
              name: tr-config
            - mountPath: /downloads
              name: tr-videoclub
              subPath: 00-Tmp/sickchill/downloads
            - mountPath: /tv
              name: tr-videoclub
              subPath: 30-Series
            - mountPath: /anime
              name: tr-videoclub
              subPath: 40-Anime
      volumes:
        - name: tr-videoclub
          persistentVolumeClaim:
            claimName: pvc-9
        - name: tr-config
          hostPath:
            path: /usr/kubedata/sickchillserver/config
            type: DirectoryOrCreate

pour bypasser la declaration d’un persistentVolume on peut declarer le le repertoire NFS directement dans le Deployement/pod

apiVersion: v1

kind: Pod

metadata:

name: mypod

spec:

containers:

- name: sickchillserver

image: lscr.io/linuxserver/sickchill

volumeMounts:

- mountPath: /config

name: tr-config

- mountPath: /downloads

name: tr-videoclub

subPath: 00-Tmp/sickchill/downloads

- mountPath: /tv

name: tr-videoclub

subPath: 30-Series

- mountPath: /anime

name: tr-videoclub

subPath: 40-Anime

volumes:

- name: tr-videoclub

nfs:

server: 192.168.1.40

path: /mnt/Magneto/9-VideoClub

- name: tr-config

hostPath:

path: /usr/kubedata/sickchillserver/config

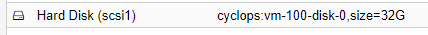

type: DirectoryOrCreatePasser 32Go de la ZFS de Proxmox au ubuntu qui host kubernetes

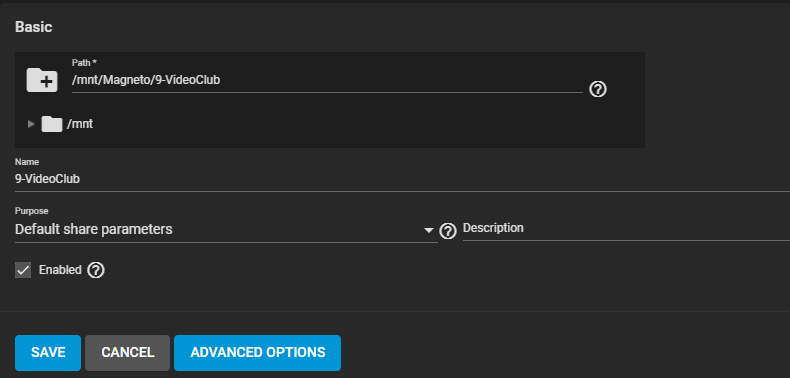

In TrueNAS, expose /mnt/Magneto/9-VideoClub over SMB.

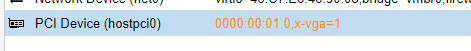

Pass the graphics card through to Ubuntu.

Install CIFS support and edit fstab to mount the TrueNAS share automatically:

sudo apt install cifs-utils

sudo nano /etc/fstab

//192.168.1.46/9-VideoClub /Videoclub cifs uid=0,credentials=/home/david/.smb,iocharset=utf8,noperm 0 0
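The fstab entry points at a credentials file. A minimal sketch of creating one with owner-only permissions, assuming the usual `username=` / `password=` key format read by mount.cifs; `write_cifs_credentials` is a hypothetical helper and the values in the usage comment are placeholders, not real credentials.

```shell
#!/bin/sh
# Hypothetical helper: write a CIFS credentials file readable only by its owner.
# $1 = file path, $2 = user name, $3 = password.
write_cifs_credentials() {
    # umask 077 inside a subshell so the file is created without group/other access
    (
        umask 077
        printf 'username=%s\npassword=%s\n' "$2" "$3" > "$1"
    )
    # Enforce mode 600 even if the file already existed with wider permissions
    chmod 600 "$1"
}

# Usage (path taken from the fstab line; user and password are placeholders):
#   write_cifs_credentials /home/david/.smb 'CHANGE_ME_USER' 'CHANGE_ME_PASSWORD'
#   sudo mount -a
```

Keeping the password out of /etc/fstab matters because fstab is typically world-readable.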

Create a partition with fdisk on the 32 GB disk provided by Proxmox, format it as ext4, and mount that partition at /usr/kubedata:

sudo nano /etc/fstab

/dev/disk/by-id/scsi-0QEMU_QEMU_HARDDISK_drive-scsi1-part1 /usr/kubedata ext4 defaults 0 0

The docker command using the prepared filesystem:

    sudo docker run -d \
      --name embyserver \
      --volume /usr/kubedata/embyserver/config:/config \
      --volume /Videoclub:/mnt/videoclub \
      --net=host \
      --device /dev/dri:/dev/dri \
      --publish 8096:8096 \
      --publish 8920:8920 \
      --env UID=1000 \
      --env GID=100 \
      --env GIDLIST=100 \
      emby/embyserver:latest

Translated into a Kubernetes Deployment:

apiVersion: apps/v1

kind: Deployment

metadata:

name: embyserver

namespace: default

labels:

app: emby

spec:

replicas: 1

selector:

matchLabels:

app: emby

template:

metadata:

labels:

run: embyserver

app: emby

spec:

containers:

- name: embyserver

image: emby/embyserver:latest

env:

- name: "UID"

value: "1000"

- name: "GID"

value: "100"

- name: "GIDLIST"

value: "100"

ports:

- containerPort: 8096

name: emby-http

- containerPort: 8920

name: emby-https

volumeMounts:

- mountPath: /config

name: emby-config

- mountPath: /mnt/videoclub

name: emby-media

volumes:

- name: emby-media

hostPath:

type: Directory

path: /Videoclub

- name: emby-config

hostPath:

type: DirectoryOrCreate

path: /usr/kubedata/embyserver/config

---

apiVersion: v1

kind: Service

metadata:

name: emby

spec:

selector:

app: emby

ports:

- name: "http"

port: 8096

targetPort: 8096

- name: "https"

port: 8920

targetPort: 8920

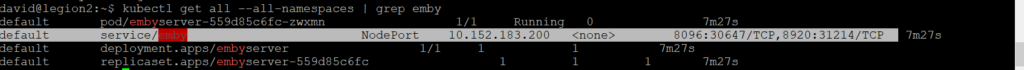

type: NodePortpuis on recupere le recupere le port d’exposition

kubectl get all --all-namespaces | grep emby

Result: the dashboard is accessible at https://<master-ip>:30647
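Rather than grepping the full listing, the assigned port can also be read straight from the Service. A small sketch, assuming kubectl is configured for the cluster and the Service sits in the default namespace (its manifest sets none); `emby_nodeport` is a hypothetical helper name.

```shell
#!/bin/sh
# Print the NodePort assigned to the port named "http" of the emby Service.
# The "default" namespace is an assumption.
emby_nodeport() {
    kubectl get service emby --namespace default \
        -o jsonpath='{.spec.ports[?(@.name=="http")].nodePort}'
}

# Usage (needs access to the cluster):
#   echo "<master-ip>:$(emby_nodeport)"
```

Replacing "http" with "https" in the filter returns the port mapped to 8920 instead.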