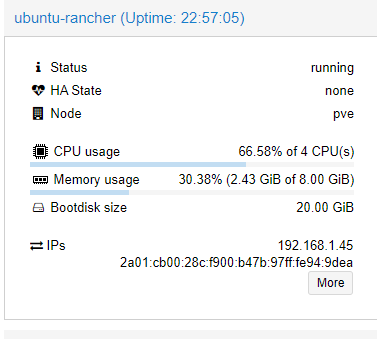

On my server (running Docker), the root filesystem sometimes fills up completely, leaving 0 bytes available:

df -h

Filesystem Size Used Avail Use% Mounted on

tmpfs 86M 11M 75M 13% /run

/dev/sda2 20G 20G 0 100% /

tmpfs 476M 0 476M 0% /dev/shm

tmpfs 5,0M 0 5,0M 0% /run/lock

192.212.40.6:/6-40-SystemSvg 227G 32G 184G 15% /SystemSvg

192.212.40.6:/9-VideoClub 1,8T 774G 967G 45% /VideoClub

tmpfs 146M 8,0K 146M 1% /run/user/1000

Clean up non-essential Docker data

docker system prune -a

docker volume rm $(docker volume ls -qf dangling=true)

docker system prune --all --volumes --force

Empty the trash

rm -rf ~/.local/share/Trash/*

or

sudo apt install trash-cli

trash-empty

System clean sweep

sudo apt-get autoremove

sudo apt-get clean

sudo apt-get autoclean
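
To see how much space a cleanup step actually frees on /, one option is to record the available space before and after. This is only a sketch: the helper name `avail_kb` is made up for illustration, and it relies on the POSIX `df -P` output format.

```shell
#!/bin/sh
# avail_kb (hypothetical helper): print the available space, in KiB,
# on the filesystem that holds the given path (default: /).
avail_kb() {
    df -Pk "${1:-/}" | awk 'NR==2 {print $4}'
}

before=$(avail_kb /)
# ... run one of the cleanup commands here, e.g. sudo apt-get clean ...
after=$(avail_kb /)
echo "Freed $((after - before)) KiB on /"
```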

Find the largest directories in the file system (run from /)

sudo du -h --max-depth=1 | sort -h

0 ./dev

0 ./proc

0 ./sys

4,0K ./cdrom

4,0K ./media

4,0K ./mnt

4,0K ./srv

4,0K ./VideoClub

16K ./lost+found

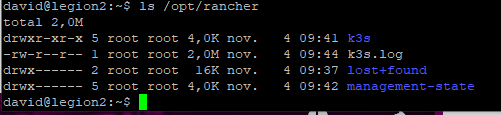

16K ./opt

52K ./root

60K ./home

68K ./tmp

1,3M ./run

6,7M ./etc

428M ./boot

823M ./SystemSvg

1,7G ./snap

4,7G ./var

9,9G ./usr

20G .
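
The listing only goes one level deep, and `du` without `-x` crosses mount points, so network mounts can end up in the totals. One possible way to drill down into a large directory such as /usr or /var while staying on the root filesystem is GNU `du -x`. The function name `top_dirs` is an assumption for illustration, not part of any tool.

```shell
#!/bin/sh
# top_dirs (hypothetical helper): list the N largest entries directly under
# a directory, in KiB, largest last. -x keeps du on a single filesystem,
# so mounts below the directory are not counted.
top_dirs() {
    dir=${1:-.}
    count=${2:-10}
    du -x -k --max-depth=1 "$dir" 2>/dev/null | sort -n | tail -n "$count"
}
```

For example, `top_dirs /var 5` in a root shell would show the five largest entries under /var; the last line is the total for /var itself.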

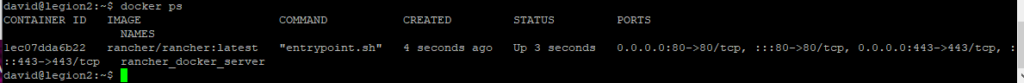

Limit log size per container

In the Compose file, for each service:

    <service_name>:
      logging:
        options:
          max-size: "10m"
          max-file: "5"

Or for all containers, in /etc/docker/daemon.json:

{

"log-opts": {

"max-size": "10m",

"max-file": "5"

}

}

Don't forget the separating comma (",") if daemon.json already contains other parameters.
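
With the default json-file log driver and the default data root, container logs live under /var/lib/docker/containers, so a quick listing can show whether logs are what fills the disk. Note that log options set in daemon.json only apply to containers created after the Docker daemon has been restarted; existing containers keep their old settings until they are recreated. The helper name `container_logs_by_size` is made up for this sketch.

```shell
#!/bin/sh
# container_logs_by_size (hypothetical helper): print the size in KiB of each
# container log file, largest last. The default path assumes the json-file
# log driver and Docker's default data root.
container_logs_by_size() {
    root=${1:-/var/lib/docker/containers}
    find "$root" -type f -name '*-json.log*' -exec du -k {} + 2>/dev/null | sort -n
}
```

Reading that directory normally requires root, so the function would have to be defined and called in a root shell.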